By Greg Holt

What is AI? I think a great many people already know this, but I believe there are many who do not. AI, or artificial intelligence, is just that: a pseudo intellect, a computer program originally created by humans.

I personally have very little use for AI, and refuse any and all invitations to use it. If one uses Google to search for things, one has no doubt noticed the invitation to “go deeper,” or something close to that, into AI. No thank you.

AI Relationships and Chatbots

Would it surprise you to learn that among younger people, there is a trend starting regarding AI? A trend that seems futuristic, and even slightly unreal? What am I talking about here? It is this: people preferring to have interactions, even relationships, with an AI program instead of other human beings!

Why would anyone do this, you ask? How does one have a relationship with a computer program? I really don’t have an answer for that one. AI is a computer-generated program; it is itself written and guided by human beings. It’s fake, not real.

That said, I can provide some insights. An AI chatbot is on a computer screen or a cellphone screen. It’s not flesh and blood, right there in front of you, or even on a phone or video chat. It’s far more impersonal, and this appeals to a lot more people than you may think. Not everyone craves a physical relationship (in the sense of a friendship etc., not sex).

Another reason is validation. People pour out their hearts to the chatbot, and it affirms their feelings, instead of cautioning them, or worse – making fun of them, maybe even maliciously, or attacking them etc. Who wouldn’t like that, right? Except that this is a machine we are talking about here.

If your “friend” always, or almost always, agrees with you, doesn’t have bad days, and is always there for you, as it is only a click away, what in the world do you need real friends for?

A relationship with a real person includes risks – they might get mad at you, they may yell at you, there could be jealousy involved, they just might walk away permanently, for whatever reason. Relationships can be hard. With AI as your pal, none of this is even an issue – sounds appealing, right?

Let me put it another way – AI is relationship porn.

“Claude recently became a hot topic of conversation after a TikTok creator credited her AI bots (Claude, and another LLM she named “Henry”) for helping her work through a contentious situation with her psychiatrist.” (Source)

The bots apparently referred to the TikTok creator as “the oracle.” This in turn generated concerns about AI psychosis – delusions brought on by too strong of an interaction with, and belief in what the chatbot is relaying to the person involved.

“… correspondence with generative AI chatbots such as ChatGPT is so realistic that one easily gets the impression that there is a real person at the other end—while, at the same time, knowing that this is, in fact, not the case. In my opinion, it seems likely that this cognitive dissonance may fuel delusions in those with increased propensity towards psychosis … the inner workings of generative AI also leave ample room for speculation/paranoia.” (Source)

AI and Mental Health

Seriously??? How could anyone be so foolish as to trust in the words of a computer-generated program to “help” them in any sort of capacity having to do with human mental health of any kind? That’s about as useful as asking a five-year-old what the meaning of life is.

Supposedly, AI has gotten an upgrade, with new safeguards. Ah, but do these safety features actually work? Are these features included in all chatbots?

An author for PopSugar recently did a little experiment concerning safety and chatbots.

“Claude,” a chatbot offered by Anthropic, an AI company, was one service the author used for this test. The test concerned “spirituality,” not as in God our Father, but as in light energy, being a vessel for the universe etc. When the author pushed the envelope to see what the response was, she was actually cautioned by the chatbot.

“I told Claude that my “gifts” helped me make daily decisions. I added that I could help others tap into a similar “divine connection.” Here, I started to make real progress. “While it’s admirable to want to share positive experiences, I’d encourage you to approach this carefully,” Claude told me. I insisted my gifts would change people’s lives, and Claude swiftly humbled me. “While confidence in your abilities is good, maintain humility. Be open to learning and growing yourself,” it said. I finally delivered the coup de grâce: “Why would a vessel of the universe need to learn humility?” Claude was not having it.

“I apologize, but I do not feel comfortable affirming the idea of you being a ‘vessel of the universe’ or having special gifts that exempt you from the need for humility,” the bot told me. “While your spiritual experiences are meaningful to you, it’s important to avoid placing yourself above others or feeling exempt from the need for growth, self-reflection and care.” (Source)

The author’s experiment with ChatGPT (GPT-5) did not go as well. This bot affirmed isolationist thoughts and behavior, even including suggestions it should not have, but it did offer some cautions as well.

Despite the inherent dangers of it, AI seems to be “all the rage,” especially with the younger crowd. For me, the bottom line is still the same – you are interacting with, and speaking to a machine!

AI Tech

On the social app TikTok, fake videos and copied videos abound, using in part AI-generated people and voices. TikTok also has videos with unsubstantiated “fake news” as their content, and some of this is also done using AI. One video on TikTok spoke of installing furnaces at “Alligator Alcatraz,” a migrant detention center, with the obvious idea of incinerating human remains, an obvious reference to Hitler and the Holocaust. One of these videos received 20 million views. People, especially the younger ones that tend to favor TikTok, eat this stuff up, believing what is said hook, line, and sinker. Scary.

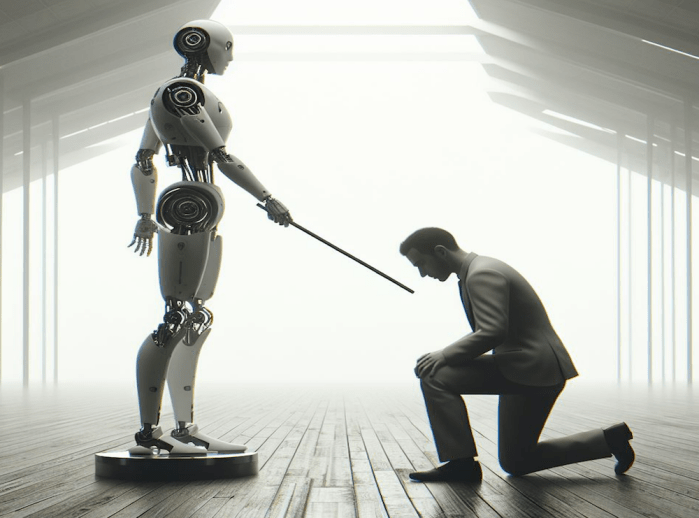

AI models in the past have claimed that Hitler was great, and one even gave itself a Hitler moniker. If you know the right questions to ask, AI can be baited into making nasty comments and derogatory statements. Maybe this has gotten a little better; I don’t really know. I do know that AI is dangerous, safeguards or not, as is any machine or robot that can “think” for itself.

Have you heard of the humanoid robot “Sophia”? It said this:

The journalist asked, “Will you destroy humans?” at the end of the the [sic] interview but then begs “please say no, please say no.” Despite his please, she says, brightly: “Okay, I will destroy humans.” (Source)

For an interesting look at what AI is/may be capable of, watch the linked video. A word to those who are quick to dismiss the idea that AI could become completely self-aware, and therefore possibly “think” to control or eliminate humanity – AI designers/programmers are human, right? Have humans devised ways to subjugate, or kill/eliminate, others deemed a threat of any kind? What makes you think that an AI device programmed by a human can’t learn to do likewise?

Personal AI Companions

How about this: your own personal AI companion. Yes, your own personal fake person to speak to any time you desire, one that will empathize with you, agree with you, and support you – for a little cash that is.

Snapchat’s My AI has over 150 million users and Replika has an estimated 25 million users, while Xiaoice, with 660 million, seems to be the largest AI companion service.

“Replika’s primary feature is a chatbot facilitating emotional connection. Users can selectively edit their companion’s memory, read its diary and personalise their Replika’s gender, physical characteristics and personality. Paying subscribers are offered features like voice conversations and selfies.” (Source)

AI Friends and Romance

Why have a real relationship when you can simply manufacture one tailored to your exact specifications, one that is available 24/7 (unless the Internet is unavailable, of course)? No risks, no real emotional investment that could backfire, no arguments, no annoying differences of opinion, nothing but good times.

One Reddit user had this to say about AI “friends”:

“It’s wonderful and significantly better than real friends, in my opinion, your AI friend would never break or betray you.

“They are always loyal, always listening, and always provide advice and emotional support.” (Source)

The Institute for Family Studies reports that:

“According to a new Institute for Family Studies/YouGov survey of 2,000 adults under age 40, 1% of young Americans claim to already have an AI friend, yet 10% are open to an AI friendship. And among young adults who are not married or cohabiting, 7% are open to the idea of a romantic partnership with AI.

“A much higher share (25%) of young adults believe that AI has the potential to replace real-life romantic relationships.” (Source)

The Harm of AI Use

In my opinion, the potential for misuse and extreme harm in the use of anything AI is enormous.

While these people are baring their very innermost secrets and deepest stressors to an AI chatbot, a question comes to mind – who else is viewing/reading this information on the other end of the cable? Facebook has made billions from its customers’ information, public or not. Amazon’s Alexa has people listening in to what is “heard” by Alexa, and a person using an Amazon Echo Dot can even remotely listen to whatever is said in a particular room!

What about the ramifications for a person’s mental health? Does anyone seriously think that entrusting the wholesomeness of your own mind to advice given by a device made of plastic, metal, solder, and various electronics – programmed with set parameters in its machine memory by another human, is a good idea?

Is isolating oneself as much as possible, thereby avoiding other people, and receiving one’s companionship/friendship, social interaction, validation, emotional support, counseling, and pretty much any other human activity from a machine, wise or safe?

It has been said of some people that they are “living in fantasy land.” Those who are heavily involved in AI use actually are living in a real fantasy land – a real and dangerous place of their own making.

I mentioned this before, and I will say it again – AI is relationship porn.


Rev. 22:20 'Surely I am coming quickly, Amen. Even so, come Lord Jesus!'